VOLUME 10

Distrust in Food Safety and Social Media Content Moderation

This is Irving Washington and Hagere Yilma. We direct KFF’s Health Misinformation and Trust Initiative, and on behalf of all of our colleagues across KFF who work on misinformation and trust, we are pleased to bring you this edition of our bi-weekly Monitor.

Summary

This volume addresses rising distrust in food safety, as concerns about food recalls after potential listeria outbreaks and artificial food dyes erode trust in the USDA and the FDA. We also examine shifts in social media content moderation, highlighting the tension between regulating harmful misinformation and protecting First Amendment rights in the recent elections, and the trend of self-diagnosis and treatment based on social media videos.

Recent Developments

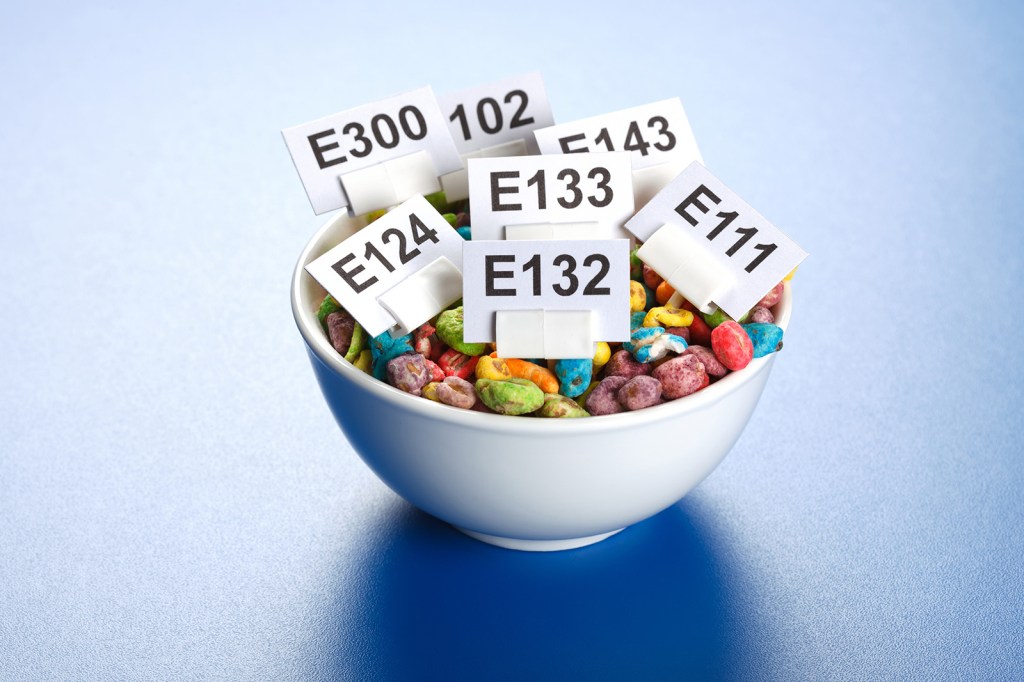

A Growing Distrust in Food Safety May Give Rise to Misinformation

Concerns about artificial dyes in the food supply have recently gained traction, with growing public suspicion that these dyes contribute to health problems, such as ADHD in children and other chronic diseases into adulthood. Protests at Kellogg’s headquarters and the recent California law banning six of these dyes in school meals reflect increasing public concern, especially among parents. While some studies suggest that synthetic food dyes may be linked to adverse health effects, the FDA currently deems these dyes safe for consumption at the levels used in foods, leading some to question the agency’s standards. Robert F. Kennedy Jr.’s “Make America Healthy Again” campaign, which promised to “clean up” the FDA and other public health agencies, tapped into this distrust and may have resonated with those who feel the FDA is no longer a reliable authority on food safety.

Recent food recalls have also fueled misconceptions about food safety, with some social media posts falsely claiming that government agencies are intentionally “poisoning” the U.S. food supply to spread illness. This response followed the USDA’s October 9 recall of millions of pounds of ready-to-eat meat potentially contaminated with listeria. In the month following the recall, mentions of food safety concerns surged in news articles and on social media, with about 1 million mentions overall and 19,000 specific mentions of BrucePac, the company whose products were recalled. The most widely viewed post on X, posted on October 16 and receiving over 439,000 views, alleged that “elites” and the FDA are deliberately contaminating food with bacteria, GMOs, and heavy metals. Many commenters echoed the unsubstantiated claim that government agencies and corporations are “intentionally poisoning” the public, despite recalls being triggered by FDA safety violations. The reemergence of unverified claims about food safety underscores the challenge of addressing public concerns in an environment where misinformation can quickly spread and influence perceptions.

Unregulated Health Advice on Social Media Promotes Self-Diagnosis and Treatment of Health Conditions

Social media has increasingly become a platform for deceptive health and wellness advertisements, sometimes using deepfake technology to feature celebrities or scientists endorsing non-FDA-approved products. From over-the-counter hearing aid scams to fake insomnia pills, the misrepresentation of unregulated and potentially dangerous medical products can confuse consumers about the safety of these treatments. Unverified rumors can also come from social media users who share their personal experiences with health and wellness. These posts are often motivated by a desire to help or connect with others, but some influential users stand to benefit financially from promoting false or misleading information about health behaviors or wellness products. Regardless of intent, false, unverified, or misleading information shared in viral videos has led to an increase in self-diagnosis and treatment of health conditions.

One example is the growing trend of self-diagnosing mental health conditions on social media, particularly on platforms like TikTok. Some users claim to have been misdiagnosed or overlooked by healthcare providers, fueling their desire to share their experiences online. Influencers with large followings and personal brands often contribute to this trend, sometimes in pursuit of financial or social gain. This can create a cycle of content that encourages self-diagnosis and the use of non-FDA-approved or non-prescribed treatments. While social media content may be seen as quick and accessible advice when professional mental health care is difficult to access, research shows that many popular mental health videos contain misleading or oversimplified information, potentially leading users to misinterpret common symptoms as serious conditions such as ADHD or anxiety. Psychologists warn that self-diagnosis based on such content can overlook complex causes or lead to inappropriate treatment attempts. Some experts suggest that educational psychologists can combat misinformation in this area by working with young people and sharing evidence-based content on platforms like TikTok.

Polling Insights:

KFF’s May Health Information Tracking Poll found that most adults who use TikTok report seeing health-related content on the app. While four in ten TikTok users say they trust the information about health issues they see on TikTok at least “somewhat,” far fewer say they have ever talked to a doctor (13%) or sought mental health treatment (12%) because of something they saw on the app (Figure 1). Among adults who use TikTok, those who are younger, women, or daily users are more likely to say they’ve talked to a doctor or sought mental health treatment at least in part because of something they saw on the app. Nonetheless, across these demographics, most users say they have not followed up with a doctor or sought mental health treatment due to content they’ve seen on TikTok.

Elections Spark Ongoing Content Moderation Challenges for Social Media Companies

The way social media companies handled harmful information leading up to the recent election has had a lasting impact on debates about balancing content moderation with First Amendment rights. Social media companies have been under scrutiny for failing to remove false or misleading election-related posts, but efforts to take down potentially harmful content often lead to accusations of censorship. For example, Meta faced criticism from its oversight board for stifling political speech when it removed a post depicting Vice President Kamala Harris and former running mate Governor Tim Walz as characters from the movie Dumb and Dumber.

A broader Republican-led narrative suggesting that the federal government is being weaponized to censor conservative viewpoints has further politicized terms like “misinformation” and created distrust in government efforts to identify and reduce harmful information on social media. As a result, even government efforts to increase transparency around content moderation face resistance from platforms. This was seen in California, where a lawsuit brought by Elon Musk claims that the state violated free speech by requiring social media companies to publicly disclose their content moderation policies. Concerns about restrictions on free speech on social media were central to several cases the Supreme Court considered this year, but the Court did not provide definitive guidance on how moderation affects First Amendment rights.

These ongoing tensions between platforms, politicians, and regulators suggest that finding the balance between free speech and content moderation will likely remain one of social media's most contentious challenges, leaving a murky area for future policy efforts.

Research Insights

Equipping TikTok Influencers with Training Boosts Accurate Mental Health Communication

A study published in Scientific Reports earlier this year explored how TikTok influencers can be encouraged to share evidence-based mental health information, with the aim of improving mental health communication on the platform. The researchers found that TikTok creators who were provided with digital toolkits and optional live training sessions were more likely to produce videos with accurate mental health information. Creators who used these resources also saw a boost in viewership of their mental health-related videos, suggesting that scalable training for influencers can combat misinformation in a manner acceptable to viewers.

Source: Motta, M., Liu, Y., & Yarnell, A. (2024). “Influencing the influencers:” a field experimental approach to promoting effective mental health communication on TikTok. Scientific Reports, 14(1), 5864.

Personal Role Models on Social Media Drive Stronger Health Motivation Through Relatability

A study in Health Communication examined how personal and entertainment role models influence people's health motivation, particularly through social media. The researchers found that people who follow someone on social media whom they consider a role model have stronger health motivations, with personal role models, like family and friends, having a greater impact on health goals than entertainment figures. Among the qualities that made role models effective in motivating health behaviors, the researchers found that perceived similarity was the strongest, as people felt more inspired by role models who seemed relatable. The findings suggest that health campaigns should leverage personal connections and relatable influencers to inspire healthier behaviors.

Source: O’Donnell, N. H., Erlichman, S., & Nickerson, C. G. (2024). Health Motivation in the Influencer Era: Analyzing Entertainment, Personal, and Social Media Role Models. Health Communication, 1-12.

AI and Emerging Technology

Study Finds Low Trust in AI for Health Information Among Older Adults

While some have found beneficial uses for AI in health information, such as debunking conspiracy theories or providing quick, low-stakes health advice, a significant portion of the population remains skeptical. A University of Michigan National Poll on Healthy Aging found that nearly three-quarters of adults over 50 have little to no trust in AI-generated health information. This distrust is particularly pronounced among women, individuals with lower education or income, and those who haven't recently seen a healthcare provider. Additionally, 20% of respondents reported low confidence in identifying health misinformation. Older adults in poorer physical or mental health faced even greater challenges in finding accurate information. The findings from this report suggest a gap in health literacy among older adults that can be addressed by health professionals.

Support for the Misinformation and Trust initiative is provided by the Robert Wood Johnson Foundation (RWJF). The views expressed do not necessarily reflect the views of RWJF, and KFF maintains full editorial control over all of its policy analysis, polling, and journalism activities. The Public Good Projects (PGP) provides media monitoring data KFF uses in producing the Monitor.